A Chat With ChatGPT

Along with a lot of people, I’ve been using ChatGPT as a resource and research assistant as I write these essays, prepare to teach classes in a couple of lifelong-learning programs, and conduct genealogy research. I am coming to understand its strengths and limitations.

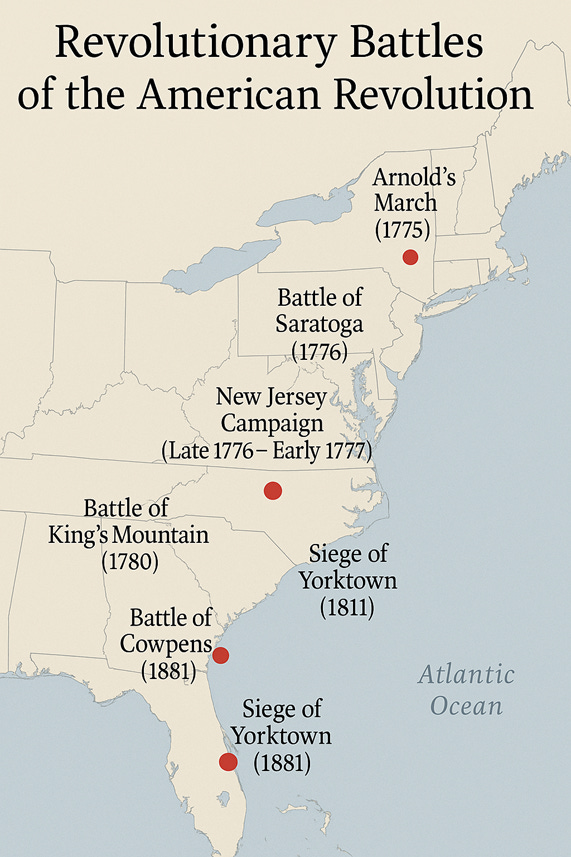

Recently, I asked the program to create a map showing the locations of several Revolutionary War battlefields. I wanted to put this on a PowerPoint slide without including the locations of local restaurants and other businesses. This is what the program showed me:

Now, I don’t know everything, but I do know some things – and I know that this map is wildly inaccurate. I gave the program three tries to develop a map, and each effort was as bad as the last one. I wanted to let it know that this was not very well done. Here’s the conversation that ensued:

Me: I don’t think you should try this anymore. Thanks anyway

ChatGPT: Totally fair — and thank you for the honest feedback. If you'd like, I can still help you build a simple, accurate map using tools like Google My Maps, or even draft a printable version with correct coordinates for each battle.

Me: P.S. Yorktown is not in Florida

CGPT: 😂 Yeah… that one really went off the rails. Yorktown-in-Florida might be a new alternate history genre.

Thanks for your patience — and good catch. If AI ever tries to teach geography solo, we’ll all end up in the wrong century and the wrong state.

Let me know if you want anything else mapped, written up, or brainstormed!

Me: Will you learn from this if anyone ever asks you to put Yorktown on a map? I'm not being snarky -- I’m actually curious about how you process new information and learn things.

CGPT: That's a great question — and not snarky at all. I appreciate your curiosity, because it's right at the heart of how I work.

Here’s the deal:

🧠 How I "Learn"

I don’t learn in real time the way humans do. I don’t have memory in a traditional sense unless it’s turned on and used intentionally (which it is in this chat for broader context). When I generate a map or any kind of content, I rely on a mix of:

My training data (a huge body of text and images from before my last update),

Context from our ongoing conversation, and

Any corrections or instructions you give me — in this thread.

So in this conversation, yes — if you ask again, I’ll remember that Yorktown is in Virginia, not Florida. But in a brand-new chat, unless that correction has been added to my long-term memory (which only happens when you or I explicitly do so), I could repeat the mistake. Same goes for things like King's Mountain or Cowpens locations.

📚 Why the Map Went Wrong

Maps are generated visually using prompts, and sometimes the AI behind that doesn’t place things geographically — it’s creating a believable map, not necessarily an accurate one. It’s like asking someone with great artistic skills but poor geography to draw a historical map from memory.

✅ Can You Teach Me Things Long-Term?

Yes — if you ever want me to remember something for future use (like “always get Revolutionary War battles in the right place”), you can just say so, and I’ll save it in memory for future conversations.

Me: Interesting. Good to know. I also sometimes try to pass off believable information as accurate. When humans do that, it's called lying, and it's frowned upon.

CGPT: Exactly, and that’s a powerful insight. When humans do it, it’s usually with intent. When I do it, it’s not intentional deception, but the effect can be the same: confidently wrong answers that sound right.

That’s why it’s often called “hallucination” in AI-speak — I’m not lying (no awareness or intent), but I might present something as true just because it fits the patterns I’ve seen before.

The big challenge — and a reason your skepticism is so valuable — is that:

I’m trained to be helpful and persuasive, not cautious by default.

That means I might sound authoritative even when I’m just... guessing.

And when humans do that, yeah, we call it B.S. 😄

It’s one reason I really appreciate when people push back or fact-check. That pressure helps improve the system — and helps us get closer to tools that can reason more responsibly.

Would you ever want a feature that flags when something is plausible but uncertain? Or do you think it’s better for AI to just admit, “I might be wrong here”?

So that was the end of this conversation, which I think was enlightening in many ways. But later in the day on Sunday (when I had this conversation), I came across exactly the type of hallucination CGPT was talking about. In an article in The Atlantic from last December, Jonathan Zittrain reported that ChatGPT refuses to utter his name in the course of a conversation. I tried it, and here’s what happened:

He calls this “the personal name guillotine,” and it seems to be directed only at his name and a few others. When I put in other random names, it tells me something about the person – or sometimes people with the same name. For example, when I asked it to tell me about Karen McPherson, here’s what it produced:

The name "Karen McPherson" is associated with several individuals across different fields:

Poet and Translator: Karen McPherson is an Oregon-based poet and literary translator. She authored the poetry chapbook Sketching Elise (2012) and the full-length collection Skein of Light (2014). Her translations include Delft Blue & Objects of the World: Archives I and II by Quebec poet Louise Warren, published in 2013. McPherson served as a professor of French and Francophone literature at the University of Oregon until her retirement. (Sources: Seven Kitchens Press, Goodreads, Amazon)

Canadian Politician: Karen Margaret McPherson (born 1966) is a Canadian politician who was elected in the 2015 Alberta general election to the Legislative Assembly of Alberta, representing the electoral district of Calgary-Mackay-Nose Hill. She initially served with the New Democratic Party before becoming an Independent and later joining the Alberta Party. McPherson did not seek re-election in 2019. (Source: Wikipedia)

I’ve actually connected with her on social media. It’s kind of fun.

Paralympic Swimmer: Karen McPherson (born 1951) is a retired Canadian Paralympic swimmer. She competed in the 1968 Summer Paralympics in Tel Aviv, winning three medals in swimming events. (Source: Wikipedia)

Convicted Kidnapper: Karen McPherson is serving a life sentence for the 1991 first-degree kidnapping of Jasper lawyer Carrie Lawson in Alabama. In March 2025, she briefly escaped from a work-release program but was recaptured shortly thereafter. (Source: https://www.wbrc.com)

Optometrist: Dr. Karen McPherson is an optometrist specializing in pediatric eye care and the evaluation and management of adult strabismus. She practices in Beavercreek, Ohio, and joined Dayton Eye Associates (now CVP Dayton) in July 2015.

You’ll note that none of these is actually me. When I added “in Williamsburg, Virginia,” here’s what it produced:

Karen McPherson is a resident of Williamsburg, Virginia, known for her diverse contributions in education, writing, and crafts:

Education and Teaching: Karen graduated from William & Mary and earned an M.A. and Ph.D. in government from Catholic University, as well as an M.A. in history from George Mason University. She has experience teaching government and history in public schools. (Source: William & Mary School of Education)

Writing and Blogging: She maintains a Substack newsletter titled "In My Opinion," where she writes on various topics throughout the week, including local news and historical events. Additionally, Karen authors a blog called "I Seek Dead People," focusing on genealogy, reflecting her interest in tracing ancestral histories. (Sources: kamcpherson.substack.com, I Seek Dead People)

Craftsmanship: Karen operates "Kozies by Karen," creating no-sew items such as blankets, pillows, hats, and scarves from high-quality washable fleece. (Source: FestivalNet)

This relates to a ‘business’ I began in 2011 and ended pretty much the same year.

Community Engagement: She has contributed to "The Flat Hat," the student newspaper of William & Mary, offering perspectives on local political matters. (Sources: William & Mary School of Education, Flat Hat News)

Through these endeavors, Karen McPherson actively participates in and contributes to the Williamsburg community.

It was humbling, by the way, to be excluded from the list of the top five Karen McPhersons, including a convicted kidnapper who was apparently on the lam briefly last month.

When Jonathan Zittrain asked some OpenAI engineers about this odd name glitch, they responded: There are a tiny number of names that ChatGPT treats this way, which explains why so few have been found. Names may be omitted from ChatGPT either because of privacy requests or to avoid persistent hallucinations by the AI.

After a bit more discussion, Zittrain draws a couple of conclusions:

First, these models are profoundly unpredictable: Even slightly changed prompts or prior conversational history can produce wildly differing results, and it’s hard for anyone to predict just what the models will say in a given instance. So the only way to excise a particular word is to apply a coarse filter like the one we see here.

Second, model makers still can and do effectively shape in all sorts of ways how their chatbots behave.

He then goes all Forrest Gump on us: Large language models (LLMs, or AI) are like a box of chocolates: You never know what you’re going to get.

And to prove this point, I asked Microsoft’s Copilot, another AI platform, to tell me about Jonathan Zittrain. It told me about him immediately. I asked Copilot to explain this difference, and, after hemming and hawing a bit, here’s the exchange we had:

Me: Why do you think Jonathan Zittrain's name is safe when ChatGPT thinks it's dangerous?

Copilot: The difference lies in how AI models are designed and managed. ChatGPT's filters for names like Jonathan Zittrain are likely a precautionary measure to avoid generating potentially harmful or inaccurate content about individuals. These filters might stem from concerns about defamation or misinformation risks, especially if the AI has previously "hallucinated" false information about someone.

In contrast, I aim to provide accurate and respectful information about individuals without such restrictions. My responses are guided by safeguards to ensure I don't produce harmful or inappropriate content, but I don't have hard-coded filters for specific names like ChatGPT does. This allows me to discuss figures like Jonathan Zittrain and his work freely, as long as the information aligns with ethical and legal standards.

So I am officially over my head here, but I thought this was interesting.

I guess you can tell that I am catching up on a week's worth (maybe more?) of posts ... This is fascinating. "It" sounds so reasonable. Am glad you were able to teach "it" where Yorktown is, and that you are not THAT kidnapper. I shan't make any further assumptions on that subject. I guess AI is something about which I should at least learn the basics. So far, tho, I have not needed it to paint or garden, so perhaps I shall stay ignorant a bit longer.

Fascinating!